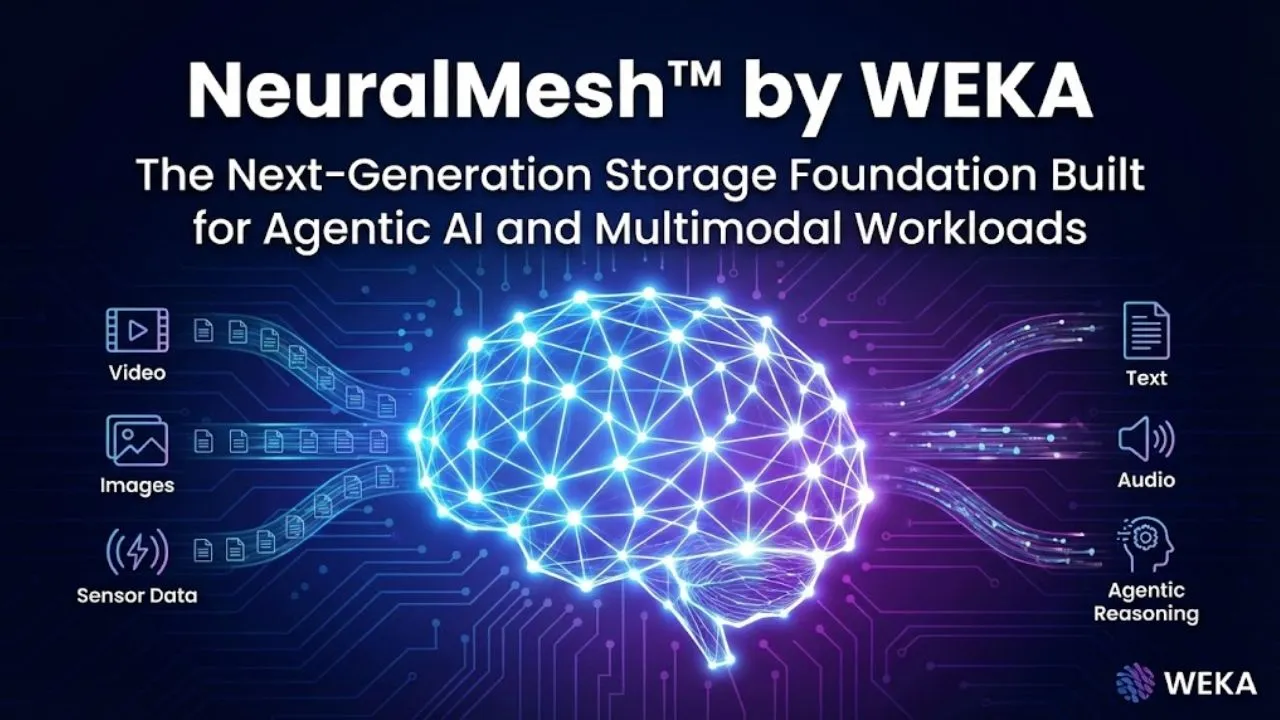

The rapid rise of agentic AI, multimodal models, and real-time reasoning is fundamentally changing infrastructure requirements. Modern AI systems now demand:

- microsecond-level latency to keep GPUs saturated

- exabyte-scale capacity with predictable performance

- seamless orchestration across edge, core, cloud, and “neocloud” environments

- zero architectural lock-in so infrastructure can evolve at the speed of AI innovation

Legacy storage architectures—designed for traditional enterprise workloads—struggle with the small-file I/O patterns, massive metadata overhead, and extreme throughput needs of today’s frontier AI training and inference pipelines. That’s why WEKA built NeuralMesh™: a complete re-architecture of distributed storage, purpose-built to accelerate AI at every layer of the stack.

NeuralMesh™ is not an incremental improvement. It is an intelligent, adaptive mesh that gets faster, more resilient, and more efficient the larger it grows.

Five Foundational Components Working in Concert

- NeuralMesh™ Core The resilient, self-optimizing foundation.

- Intelligently balances I/O across all nodes to eliminate hotspots

- Automatically utilizes idle GPU resources for background tasks

- Provides flexible failure domains and auto-healing for high availability and durability

- Scales linearly in performance and capacity without degradation

- NeuralMesh™ Accelerate The performance engine that removes every traditional storage bottleneck.

- Creates direct, ultra-low-latency paths between data and applications

- Intelligently distributes metadata across the entire cluster

- Combines memory-speed and flash-speed tiers into a single, unified pool

- Delivers consistent microsecond response times — even under the most punishing small-file and metadata-intensive AI workloads

- NeuralMesh™ Deploy Future-proof deployment flexibility.

- Run the same software stack and achieve identical performance characteristics across edge, core, public cloud, private cloud, or hybrid “neocloud” environments

- No vendor lock-in, no forced architectural rip-and-replace when requirements change

- Supports containerized, bare-metal, and virtualized deployments

- NeuralMesh™ Observe Intelligent operations and observability hub.

- Provides granular, real-time visibility into multi-tenant AI clusters

- Customizable dashboards, proactive alerting, and event correlation

- Helps operators optimize resource utilization and quickly diagnose anomalies

- NeuralMesh™ Enterprise Services The enterprise-grade foundation layer.

- End-to-end encryption at rest and in flight

- Advanced data reduction (compression + deduplication)

- Robust snapshots, replication, and ransomware protection

- Compliance-ready auditing and security controls

Why NeuralMesh™ Matters for Agentic & Multimodal AI

Agentic systems continuously reason, plan, and act—often in real time—while multimodal models ingest and generate text, images, video, audio, and sensor streams simultaneously. These workloads create extreme demands:

- Billions of tiny metadata operations per second

- Massive parallel read/write streams to keep thousands of GPUs fed

- Low-latency access whether data lives at the edge or in a central cloud region

- Rapid infrastructure elasticity as model sizes and training runs scale unpredictably

NeuralMesh™ addresses every one of these pain points. By eliminating legacy storage choke points, it makes GPUs run faster, training epochs complete sooner, and inference latency drop dramatically. The system doesn’t just store data — it actively accelerates the entire AI pipeline.

Read Also: Deep Blue vs. Garry Kasparov: The 1997 Chess Match That Proved Machines Could Outthink Humans

The Bottom Line

NeuralMesh™ by WEKA is more than high-performance storage. It is an accelerant for AI innovation — a foundation that delivers:

- Faster time-to-results for your teams and clients

- Faster business outcomes and ROI

- Faster innovation cycles that keep you ahead in the agentic AI era

If your organization is building or deploying frontier AI at scale, legacy storage will hold you back. NeuralMesh™ is engineered to propel you forward.

The foundation for enterprise and agentic AI innovation.

Disclaimer: This article is a structured summary based on the provided promotional text describing NeuralMesh™ by WEKA. All claims regarding performance, features, architecture, and benefits are taken directly from the source material and reflect WEKA’s stated positioning as of February 2026. Actual product capabilities, benchmarks, and availability should be verified directly through official WEKA documentation, datasheets, or contact with WEKA sales/engineering teams. This is not an independent review, technical validation, or endorsement.