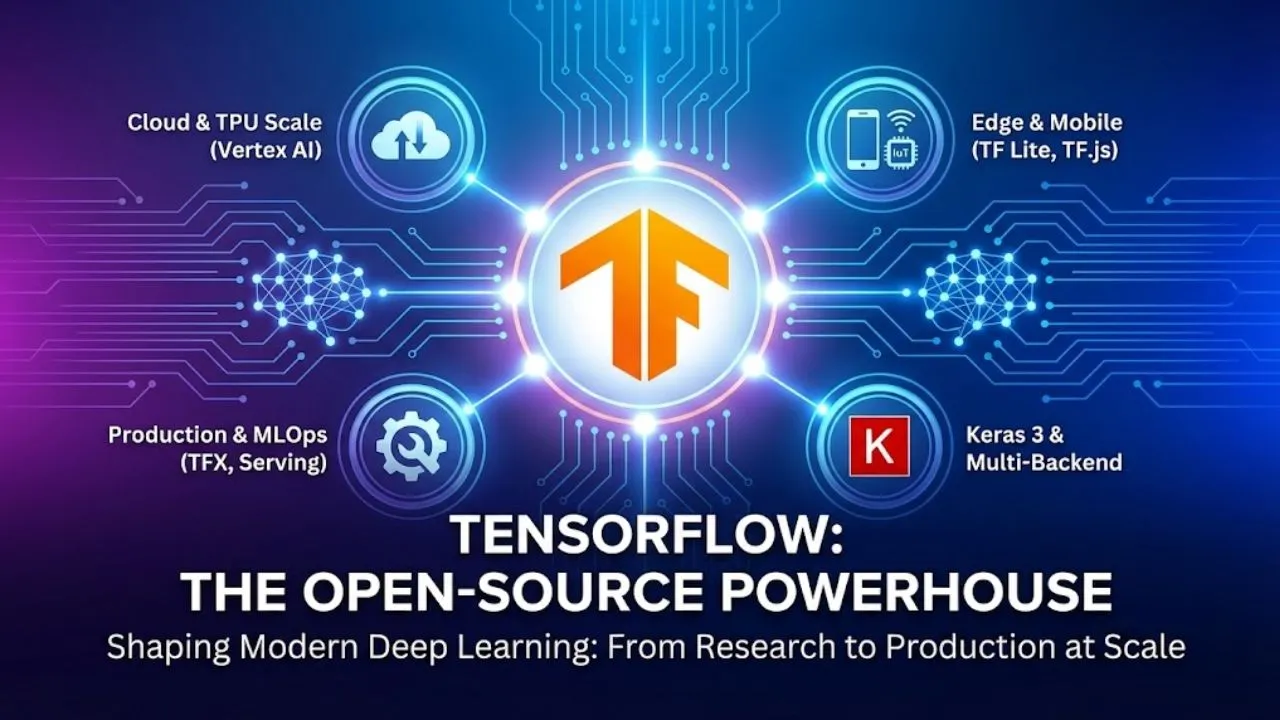

When people talk about the explosion of deep learning and AI since 2015, one name almost always comes up first: TensorFlow. Released by Google in November 2015 as an open-source machine learning framework, TensorFlow quickly became the de facto standard for building, training, and deploying neural networks at scale.

Even in 2026 — with PyTorch dominating research papers and JAX gaining traction in high-performance computing — TensorFlow remains one of the most widely deployed frameworks in production environments worldwide. From mobile apps to massive cloud clusters, TensorFlow powers real-world AI every day.

A Brief History: From Google Brain to Ecosystem Leader

- 2015: TensorFlow 0.5 is released under the Apache 2.0 license. Google open-sources what began as an internal tool, the successor to its DistBelief system.

- 2017: TensorFlow 1.0 brings API stability for production use, and Keras is integrated as the official high-level API (tf.keras).

- 2019: TensorFlow 2.0 makes eager execution the default, cleans up the API substantially, and centers development on Keras end to end.

- 2020–2022: The deployment ecosystem matures. TensorFlow Lite (mobile/edge), TensorFlow.js (browser), and TFX (end-to-end MLOps) become standard parts of the stack, and TensorFlow Quantum (hybrid quantum-classical ML) launches in 2020.

- 2023–2026: The TensorFlow 2.12+ releases bring better multi-GPU/TPU support, improved XLA compilation, Keras 3 (multi-backend) as the default Keras from TensorFlow 2.16 onward, support for additional hardware through plugins and vendor builds (AMD ROCm, Apple Silicon via Metal, Intel oneAPI), and growing GenAI tooling (transformers, diffusion models, LLM fine-tuning helpers).
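The history above mentions improved XLA compilation. In TensorFlow 2 code, one way to ask for XLA explicitly is the `jit_compile=True` argument of `tf.function`. This is a minimal sketch assuming a standard TensorFlow 2.x install; the function name `dense_relu` and its toy inputs are made up for illustration, and any speedup in practice depends on the model and hardware.

```python
import tensorflow as tf

# jit_compile=True asks TensorFlow to compile the traced function with XLA,
# which can fuse the matmul, bias add, and ReLU into fewer kernels.
@tf.function(jit_compile=True)
def dense_relu(x, w, b):
    return tf.nn.relu(tf.matmul(x, w) + b)

# Hypothetical toy inputs: one example with two features, identity weights.
x = tf.constant([[1.0, -2.0]])
w = tf.constant([[1.0, 0.0], [0.0, 1.0]])
b = tf.constant([0.5, 0.5])

y = dense_relu(x, w, b)  # first call traces and compiles; later calls reuse it
print(y.numpy())
```

The numerical result is the same as without XLA; only how the computation is compiled and executed changes.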

Today TensorFlow is maintained by Google together with a large open-source community. Its GitHub repository is among the most-starred machine learning projects, and the package sees millions of downloads every month.

Core Strengths of TensorFlow in 2026

| Strength | What It Means in Practice | Why It Still Wins in Production |
|---|---|---|
| Production-First Design | TFX (TensorFlow Extended), TensorFlow Serving, TensorFlow Lite, TensorFlow.js | Trusted for large-scale, regulated deployments |
| Keras 3 | Single API that runs on TensorFlow, PyTorch, and JAX backends | Write the model once, run it on any supported backend |
| Ecosystem Depth | TFX, TensorBoard, TensorFlow Hub, TensorFlow Datasets, TensorFlow Probability, TF-DF (Decision Forests) | Full MLOps and research tooling in one stack |
| Hardware Acceleration | Native TPU support, XLA compiler, CUDA, plus ROCm/Metal/DirectML through vendor builds and plugins, oneDNN optimizations | Tight integration with Google Cloud TPUs |
| Edge & Mobile | TensorFlow Lite (rebranded LiteRT in 2024), TensorFlow Lite for Microcontrollers, GPU/NPU delegates | Runs on phones, IoT, and embedded devices |
| Browser & JavaScript | TensorFlow.js: train and run inference directly in the browser or Node.js | Web-based AI apps, interactive demos |
| Explainability & Privacy | TF Privacy (DP-SGD), TF Encrypted, What-If Tool, Model Card Toolkit | Critical for finance, healthcare, government |

Real-World Use Cases in 2026

- Recommendation Systems — YouTube, Google Play, Spotify-like personalization at scale

- Computer Vision — Google Photos (object detection, face clustering), Waymo (perception stack)

- Natural Language Processing — Google Translate backend, BERT/T5 fine-tuning pipelines

- Healthcare — Medical image segmentation, predictive diagnostics, federated learning on patient data

- Finance — Fraud detection, algorithmic trading, credit risk models with strong governance

- Manufacturing & IoT — Predictive maintenance, anomaly detection on factory sensor streams

- Mobile & Edge AI — On-device speech recognition, live filters, OCR in camera apps

- Scientific Research — Climate modeling, protein folding support, quantum ML experiments

Quick Code Example (TensorFlow + Keras 3 in 2026 Style)

```python
import tensorflow as tf
import keras  # Keras 3, installed alongside TensorFlow 2.16+
from keras import layers

# Simple CNN for image classification (MNIST example)
model = keras.Sequential([
    keras.Input(shape=(28, 28, 1)),
    layers.Conv2D(32, 3, activation="relu"),
    layers.MaxPooling2D(),
    layers.Conv2D(64, 3, activation="relu"),
    layers.MaxPooling2D(),
    layers.Flatten(),
    layers.Dense(128, activation="relu"),
    layers.Dropout(0.5),
    layers.Dense(10, activation="softmax"),
])

model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",  # labels are integers 0-9
    metrics=["accuracy"],
)

# Load MNIST, add a channel axis, and scale pixels to [0, 1]
(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()
x_train = x_train[..., tf.newaxis].astype("float32") / 255.0
x_test = x_test[..., tf.newaxis].astype("float32") / 255.0

# Train (the test split doubles as validation data to keep the example short)
model.fit(x_train, y_train, epochs=10, validation_data=(x_test, y_test))

# Export an inference-only SavedModel (for TensorFlow Serving)
model.export("mnist_model")
```

Why TensorFlow Remains a Top Choice in 2026

- Unmatched production maturity: battle-tested at planetary scale

- Keras 3 multi-backend: write code once, run it on TensorFlow, PyTorch, or JAX

- Google ecosystem synergy: TPUs, Vertex AI, BigQuery ML, Colab, TensorFlow Extended

- Edge-to-cloud continuum: a model trained once can be converted for microcontrollers (TensorFlow Lite for Microcontrollers) and browsers (TensorFlow.js), or scaled up to a TPU pod

- Strong governance story: lineage tracking, model cards, differential privacy tools
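Much of the production story rests on the SavedModel format that the MNIST example writes with `model.export`. This is a minimal sketch, assuming TensorFlow 2.16+ with Keras 3. It exports a small placeholder model to a temporary directory, reloads it with plain `tf.saved_model.load`, and checks that the exported `serve` endpoint matches `model.predict`. The model and directory are illustrative only.

```python
import tempfile

import numpy as np
import tensorflow as tf
import keras
from keras import layers

# Hypothetical stand-in model; a trained model is exported the same way.
model = keras.Sequential([
    keras.Input(shape=(3,)),
    layers.Dense(2, activation="softmax"),
])

export_dir = tempfile.mkdtemp()

# Write an inference-only TensorFlow SavedModel with a "serve" endpoint.
model.export(export_dir)

# Reload as a plain TensorFlow object, the way a serving process would.
reloaded = tf.saved_model.load(export_dir)

x = np.random.rand(4, 3).astype("float32")
keras_out = model.predict(x, verbose=0)
served_out = reloaded.serve(x).numpy()

# Both paths should give the same predictions (up to float tolerance).
print(np.allclose(keras_out, served_out, atol=1e-5))
```

Checking the reloaded artifact against the in-memory model like this is a cheap sanity test before handing a SavedModel to TensorFlow Serving.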


Final Verdict

TensorFlow is no longer “the Google framework” — it’s the production-grade backbone of serious AI deployments. If your team needs to ship reliable, scalable, auditable models — especially in regulated industries or at massive scale — TensorFlow (with Keras 3) is still one of the safest, most complete choices available.

PyTorch may win the research beauty contest, but when it’s time to actually run AI in the real world, TensorFlow quietly powers more of it than most people realize.

Disclaimer: This article is an educational overview of TensorFlow based on official documentation, GitHub activity, TensorFlow blog posts, and community usage patterns as of February 2026. Features, performance, and ecosystem integrations can change with new releases. Always refer to tensorflow.org, the official TensorFlow GitHub, or Google Cloud Vertex AI documentation for the latest information, tutorials, and installation instructions.